For many large enterprises, the mainframe and the z/OS platform have been the backbone of business-critical applications for decades. Huge volumes of data still reside in Db2, VSAM, or IMS – a result of years of continuous operation. The corresponding applications are often written in COBOL, but the internal expertise to maintain this technology is dwindling. As a result, pressure is mounting to move on from the mainframe and modernize the IT landscape.

But this transition poses significant challenges:

- How can legacy data be reliably migrated to new systems?

- How do you handle the complexities and special characteristics of various data sources?

- How can teams effectively test new applications – using current, production-like data?

- How is compliance with data protection regulations ensured throughout the process?

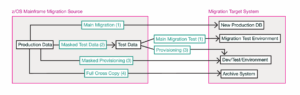

Data Movement Pathways During Migration

As organizations shift from the mainframe to modern platforms, multiple data movement pathways are essential to support both the migration process and the diverse needs of development and testing teams. While the core migration path typically focuses on populating the new application environment with business-critical data, the practical requirements of large projects necessitate a broader approach, encompassing dedicated flows for secure testing, flexible provisioning, and long-term archival.

Main Migration Path

The principal data stream is the one-time or phased migration of production data from the z/OS source system into the target application platform. This path establishes the foundation for the new system, initializing its operational database with current, validated information. A simple transfer of the data can be accomplished with a cross copy, but organizations often implement this main path with bespoke, application-specific, or in-house tools, especially when the data needs extensive restructuring or transformation (1).

In practice, many organizations choose to split the migration into phases, first replicating data to the new platform and then gradually refactoring structures to minimize risk and complexity.

Provisioning Masked Test Data

To ensure the integrity and security of the migration process itself, a separate flow is required for provisioning masked or anonymized test data (2) directly from the migration source. This allows teams to safely develop, refine, and validate migration routines without exposing sensitive production information. Such masked datasets are crucial for stepwise testing and for regulatory compliance during the development phase.
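To make the idea of masking concrete, here is a minimal Python sketch of deterministic pseudonymization. It is a generic illustration and not XDM's implementation: the function names, the `SURNAMES` pool, and the use of a keyed HMAC are all assumptions chosen for the example. Because the replacement is derived from a secret key, the same input always yields the same masked value, so relationships between records survive masking.

```python
import hashlib
import hmac

# Hypothetical replacement pool; a real project would use a far larger list.
SURNAMES = ["Adler", "Brandt", "Conrad", "Dietrich", "Engel", "Fischer"]


def _digest(secret: bytes, value: str) -> bytes:
    """Keyed hash of the input; without the secret the mapping cannot be rebuilt."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).digest()


def mask_surname(secret: bytes, surname: str) -> str:
    """Replace a surname with a pool entry chosen deterministically from the input."""
    normalized = surname.strip().upper()
    index = int.from_bytes(_digest(secret, normalized)[:8], "big") % len(SURNAMES)
    return SURNAMES[index]


def mask_account_number(secret: bytes, account: str) -> str:
    """Replace every digit with a keyed pseudo-random digit.

    Length and non-digit characters (prefixes, separators) are preserved so the
    masked value still fits the original column definition. The modulo step is
    slightly biased, which is acceptable for a sketch.
    """
    stream = _digest(secret, account)
    masked = []
    position = 0
    for char in account:
        if char.isdigit():
            masked.append(str(stream[position % len(stream)] % 10))
            position += 1
        else:
            masked.append(char)
    return "".join(masked)


if __name__ == "__main__":
    key = b"example-secret"  # assumption: in practice the key is managed securely
    print(mask_surname(key, "Meier"))
    print(mask_account_number(key, "DE-1234567890"))
```

A deterministic scheme like this keeps foreign-key values consistent across tables. The trade-off is that the secret must be protected, since anyone who holds it can test guesses against the masked values.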

Cross-Copy for Test and Development Environments

Beyond the initial migration, ongoing project activities demand flexible mechanisms for copying subsets of data—from both production and non-production (test) systems on z/OS—into the target environment (3). These cross-copy processes enable developers and testers to work with up-to-date, production-like datasets in the new platform’s development and QA environments, facilitating robust validation and iterative refinements without interfering with the main migration stream.

Comprehensive Archival Path

Finally, a full system copy is often required for archival purposes. This involves creating a one-to-one replica of the original legacy data as a historical snapshot prior to migration, for example by loading the entire dataset into a modern database like PostgreSQL (4). The replica serves primarily as a data archive for audit, reference, and post-migration corrections, rather than as a platform for running legacy applications. The data remains accessible via queries if needed, even after the main migration and any restructuring of production data.
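A one-to-one replica needs target tables whose column types correspond to the source. The following Python sketch shows how a simplified Db2-to-PostgreSQL type mapping could be turned into `CREATE TABLE` statements. The mapping table, the function names, and the sample `archive.customer` table are assumptions made for illustration; a real project would need to cover more types and edge cases.

```python
# Simplified, assumed mapping: Db2 base type -> (PostgreSQL type, keep length/precision?)
TYPE_MAP = {
    "CHAR": ("CHAR", True),
    "VARCHAR": ("VARCHAR", True),
    "DECIMAL": ("NUMERIC", True),
    "SMALLINT": ("SMALLINT", False),
    "INTEGER": ("INTEGER", False),
    "BIGINT": ("BIGINT", False),
    "DATE": ("DATE", False),
    "TIMESTAMP": ("TIMESTAMP", False),
    "BLOB": ("BYTEA", False),
    "CLOB": ("TEXT", False),
}


def map_type(db2_type: str) -> str:
    """Translate a Db2 column type such as 'DECIMAL(11,2)' into a PostgreSQL type."""
    base, paren, rest = db2_type.strip().partition("(")
    base = base.strip().upper()
    if base not in TYPE_MAP:
        raise ValueError(f"no mapping defined for {db2_type!r}")
    target, keep_args = TYPE_MAP[base]
    return target + (paren + rest if keep_args else "")


def build_create_table(table: str, columns: list[tuple[str, str, bool]]) -> str:
    """Build a CREATE TABLE statement from (name, db2_type, nullable) tuples."""
    lines = []
    for name, db2_type, nullable in columns:
        null_clause = "" if nullable else " NOT NULL"
        lines.append(f"    {name.lower()} {map_type(db2_type)}{null_clause}")
    return f"CREATE TABLE {table} (\n" + ",\n".join(lines) + "\n);"


if __name__ == "__main__":
    # Hypothetical source table description
    print(build_create_table("archive.customer", [
        ("CUST_ID", "INTEGER", False),
        ("NAME", "VARCHAR(40)", True),
        ("BALANCE", "DECIMAL(11,2)", False),
    ]))
```

Raising an error for unmapped types, rather than guessing a default, keeps gaps in the mapping visible before any data is loaded into the archive.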

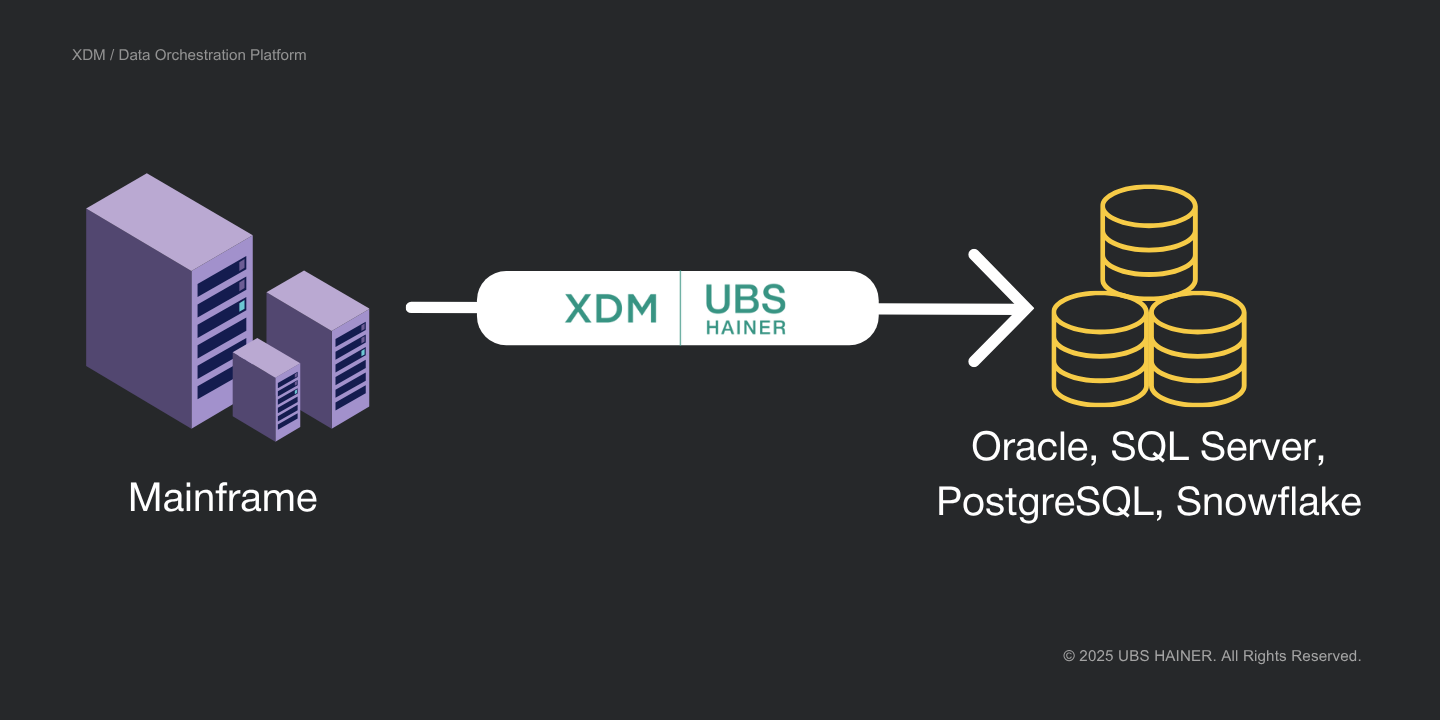

XDM as a Solution for Data Migration and Test Data Management

XDM offers clear solutions to simplify migrations and ongoing test data provisioning:

- Cross-Platform Data Extraction and Loading

XDM natively supports mainframe sources like Db2 for z/OS, VSAM, and IMS, as well as today’s target platforms – Oracle, SQL Server, PostgreSQL, Snowflake, and more. Data can be moved across system boundaries, with automatic conversion of formats and data types, minimizing manual interventions and project risk.

- Automated Handling of Structural Differences

Legacy and modern systems often differ at the structural level: new or missing fields, renamed tables, altered data types. XDM detects such differences on the fly, generates target DDL scripts as needed, and applies mapping rules for renaming tables and columns or transforming data. Even modern target platforms where data storage is split across multiple databases can be accommodated.

- Integrated Data Masking and Anonymization

Using real production data in test environments often isn’t allowed – especially in regulated industries like insurance, health care, and banking. XDM includes a wide range of pre-built data masking algorithms (for names, addresses, account numbers, etc.) that can be flexibly applied, ensuring compliance with data privacy regulations. Alternatively, XDM can generate synthetic yet realistic test data using AI, further supporting data protection.

- Workflow Automation & Self-Service Test Data Provisioning

XDM enables you to model and automate end-to-end provisioning workflows: from data selection and masking, to delivery and validation in the target environment. Business users and testers can use web interfaces to order fresh test data as needed, without any mainframe expertise.

- Monitoring and Auditability

Every migration and provisioning step is logged and reported on in detail. Issues or anomalies become visible early, and comprehensive audit trails show exactly what data was transferred, when, and how – including any transformations applied.
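To illustrate what the conversion of formats and data types in the first item involves, the Python sketch below decodes a single fixed-length mainframe record. It is a generic example, not XDM code. The record layout (a 10-byte EBCDIC name followed by a 4-byte packed-decimal balance with two decimal places), the code page `cp037`, and all function names are assumptions made for the example.

```python
from decimal import Decimal


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a packed-decimal (COBOL COMP-3) field.

    Each byte holds two 4-bit digits; the low nibble of the last byte is the sign.
    """
    if not raw:
        raise ValueError("empty packed-decimal field")
    digits = []
    for byte in raw[:-1]:
        digits.append(byte >> 4)
        digits.append(byte & 0x0F)
    digits.append(raw[-1] >> 4)
    sign = raw[-1] & 0x0F
    if any(d > 9 for d in digits) or sign < 0x0A:
        raise ValueError(f"invalid packed-decimal data: {raw.hex()}")
    value = int("".join(str(d) for d in digits))
    if sign in (0x0B, 0x0D):  # B and D mark negative values
        value = -value
    return Decimal(value).scaleb(-scale)


def decode_record(record: bytes) -> dict:
    """Decode one record of the assumed layout.

    NAME    PIC X(10)           bytes 0-9, EBCDIC text
    BALANCE PIC S9(5)V99 COMP-3 bytes 10-13, packed decimal with 2 decimals
    """
    if len(record) != 14:
        raise ValueError("unexpected record length")
    return {
        "name": record[0:10].decode("cp037").rstrip(),
        "balance": unpack_comp3(record[10:14], scale=2),
    }


if __name__ == "__main__":
    # Build a sample record: 'MEIER' padded to 10 bytes, balance -1234.56
    sample = "MEIER".ljust(10).encode("cp037") + bytes.fromhex("0123456D")
    print(decode_record(sample))
```

Returning a `Decimal` rather than a float keeps monetary values exact, which matters when migrated figures are later compared against the output of the legacy programs.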

Conclusion

Migrating from z/OS to modern platforms is a complex endeavor, but tools like XDM can simplify two of the hardest parts: reliable data migration and consistent, compliant test data delivery. This frees enterprise teams to innovate and deliver real business value while ensuring that new applications are robustly tested, fully compliant, and match the outcomes of legacy COBOL programs.